Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post-Xitter web has spawned soo many “esoteric” right-wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality-challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be).

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Semi-obligatory thanks to @dgerard for starting this)

one of OpenAI’s cofounders wrote some thoroughly unhinged shit about the company’s recent departures

Thank you, guys, for being my team and my co-workers. With each of you, I have collected cool memories — with Barret, when we had a fierce conflict about compute for what later became o1; with Bob, when he reprimanded me for doing a jacuzzi with a coworker; and with Mira, who witnessed my engagement.

I am in awe of the sheer number of GPUs… whose lives ChatGPT has changed.

If it was just this one line, this would be in the top 10 funniest things ever written around genAI. Too bad the rest of the rambling insanity ruins it.

I can’t be the only one reading that super passive aggressively right? “Thank you Barret, whom I hated. Bob, for ruining my hot Jacuzzi date. And Mira, for existing.”

right with you on team passive aggressive.

Fun fact: All of these incidents happened at the same jacuzzi party.

I heard it was the same 50 parties, over and over

(or, well, the same party, x50…)

lmao this is weird as fuck, reminds me of the bullshit Lex Fridman comes up with, I can totally imagine him saying things like this

love a good second paragraph jumpscare

I am sure this is totally not sketchy in the slightest and the people behind it have no nefarious agenda whatsoever.

fuck that’s gross

where’d you find/run across that? can’t tell if it’s a normal ad or some gig-site thing or what

Saw it posted on Reddit. It’s apparently from clickworker.com which is a weird-ass website by itself at first glance, with great pitches like this:

People are happier if they are more financially independent. We can help you achieve this.

ah, domain name sounds like it’s one of the microwork farms

Ah yes the advertisement which causes T&S people (who have seen some things) to go on long rants on why you should never put pictures of your kids online publicly.

Found a good one in the wild

Didn’t you know LLM stood for Limited Liability Machine

A lobsters states the following in regard to LLMs being used in medical diagnoses:

If you have very unusual symptoms, for example, there’s a higher chance that the LLM will determine that they are outside of the probability space allowed and replace them with something more common.

Another one opines:

Don’t humans and in particular doctors do precisely that? This may be anecdotal, but I know countless stories of people being misdiagnosed because doctors just assumed the cause to be the most common thing they diagnose. It is not obvious to me that LLMs exhibit this particular misjudgement more than humans. In fact, it is likely that LLMs know rare diseases and symptoms much better than human doctors. LLMs also have way more time to listen and think.

<Babbage about UK parlaimentarians.gif>

nothing hits worse than an able-bodied techbro imagining what medical care must be like for someone who needs it. here, let me save you from the possibility of misdiagnosis by building and mandating the use of the misdiagnosis machine

Also please fill in the obligatory rant about how LLMs don’t actually know any diseases or symptoms. Like, if your training data was collected before 2020 you wouldn’t have a single COVID case, but if you started collecting in 2020 you’d have a system that spat out COVID to a disproportionately large fraction of respiratory symptoms (and probably several tummy aches and broken arms too, just for good measure).

Not a sneer, but some truly beautiful karma:

Judge to approve auctions liquidating Alex Jones’ Infowars to help pay Sandy Hook families

Turns out trump really does understand cryptocurrency perfectly. Who’d have thought? Some folk seem surprisingly unhappy about this, though.

Maybe we’ll pay off the $35 trillion US debt in Crypto. I’ll write on a little piece of paper ‘$35T crypto we have no debt.’ That’s what I like.

The question (which doesn’t matter) now is, does he really understand crypto? Or did he arrive at the right conclusion because he thinks that everybody else, like him, is just scamming all the time?

Given the kinds of crowds he hangs out with (i.e. mostly other rich people and political elite) is that not an understandable conclusion?

anything that makes yglesias have a bad day is generally a good thing

but it sounds like the orange man understands the crypto market perfectly: the numbers are all made up and everyone’s lying

(at least I think it’s that one? one of them’s quite the bootlicker. I’m bad at names tho)

I mean there are definitely some brain rotted crypto bros who would buy shares at face value because it’s totally gonna go to the moon guys

I might not be a huge fan of his work, but I’ll take it any day over AI slop. At least it’s a creative vision.

You don’t have to agree with someone to recognize that they care.

Do you think when the Trumps get paperclipped it will look something like this?

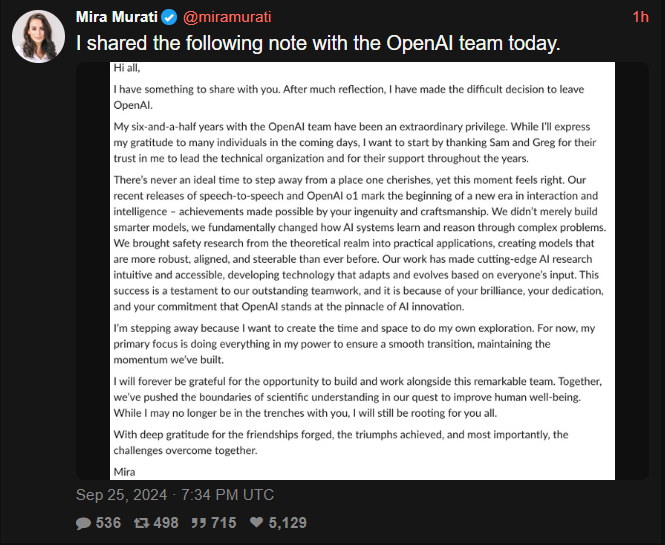

This was the woman who took over during Sam Altman’s temporary removal as CEO, which we’re pretty sure happened because the AI doom cultists weren’t satisfied that Altman was enough of an AI doom cultist.

Yudkowsky was solidly in favor of her ascension. I take no joy in saying this as someone who wants this AI nonsense to stop soon, but OpenAI is probably better off financially with fewer AI doom cultists in high positions.

Do you think they still say all that bullshit even when they’re not screenshotting it for twitter? Probably, right

hundo p

Inventor sez “I locked myself in my apartment for 4 years to build this humanoid”. Surprisingly, not a sexbot!

not a sexbot!

Skill issue.

You know that’s for Gen 2.0

This tech curve is about to go exponential, if you know what I’m sayin’

–venture capitalists, probably

all hail the hockey stick may we forever outspend all competition and reap the rewards of a ravaged market we solely control

Surprisingly, not a sexbot!

Well, not with that attitude

Surprisingly, not a sexbot!

would be a good album name

also series potential in there

Surprisingly (not), a sexbot!

Saw an unexpected Animatrix reference on Twitter today - and from an unrepentant promptfondler, no less:

This ended up starting a lengthy argument with an “AI researcher” (read: promptfondler with delusions of intelligence), which you can read if you wanna torture yourself.

yes. that’s all true, but academics and artists and leftists are actually calling for Buttlerian jihad all the time. when push comes to shove they will ally with fascists on AI

This guy severely underestimates my capacity for being against multiple things at the same time.

The type of guy who was totally convinced by the ‘but what if the AI needs to generate slurs to stop the nukes?’ argument.

Guy invented a new way to misinterpret the matrix, nice. Was getting tired of all the pilltalk

Not a sneer, but

~~Kendrick~~ Ed Zitron just dropped. It’s damn good as usual, with Zitron taking aim at the current state of SaaS and tying it into his previous sneers on AI.

Can we get a universe where Ed writes a verse on Kendrick’s hopefully-imminent Elon Musk dis track?

ya know that inane SITUATIONAL AWARENESS paper that the ex-OpenAI guy posted, which is basically a window into what the most fully committed OpenAI doom cultists actually believe?

yeah, Ivanka Trump just tweeted it

But right now, there are perhaps a few hundred people, most of them in San Francisco and the AI labs, that have situational awareness. Through whatever peculiar forces of fate, I have found myself amongst them.

oh boy

From that blog post:

You can see the future first in San Francisco.

“And that, I think, was the handle - that sense of inevitable victory over the forces of old and evil. Not in any mean or military sense; we didn’t need that. Our energy would simply prevail. We had all the momentum; we were riding the crest of a high and beautiful wave. So now, less than five years later, you can go up on a steep hill in Las Vegas and look west, and with the right kind of eyes you can almost see the high-water mark - that place where the wave finally broke and rolled back.”

barron: shows ivanka the minecraft speedrun

ivanka: I HAVE SEEN THE LIGHT, BROTHER

edit: accidentally read ivanka as melania, now corrected

Personally, I was radicalized by ‘watch for rolling rocks’ in .5 A presses

parallel universes were the inspiration behind urbit, it all makes sense

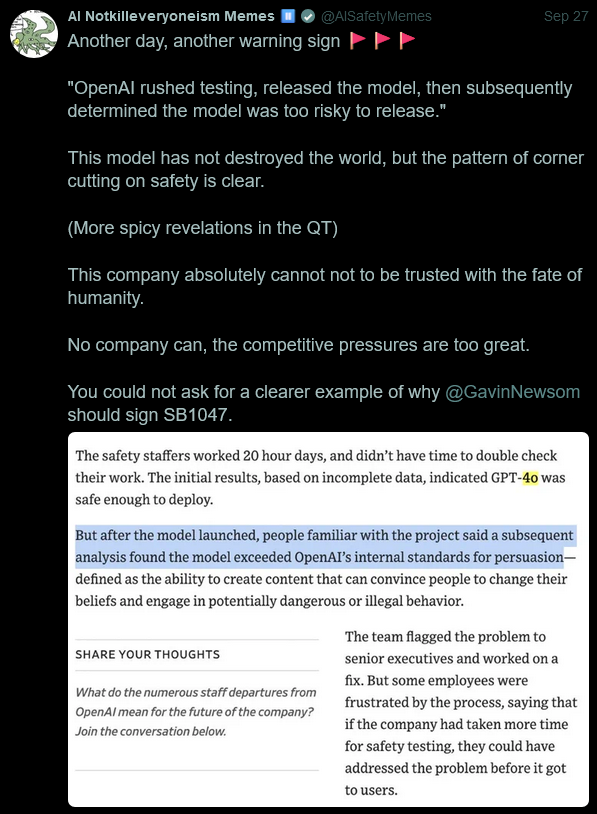

I vaguely remember mentioning this AI doomer before, but I ended up seeing him openly stating his support for SB 1047 whilst quote-tweeting a guy talking about OpenAI’s current shitshow:

I’ve had this take multiple times before, but now I feel pretty convinced the “AI doom/AI safety” criti-hype is going to end up being a major double-edged sword for the AI industry.

The industry’s publicly and repeatedly hyped up this idea that they’re developing something so advanced/so intelligent that it could potentially cause humanity to get turned into paperclips if something went wrong. Whilst they’ve succeeded in getting a lot of people to buy this idea, they’re now facing the problem that people don’t trust them to use their supposedly world-ending tech responsibly.

it’s easy to imagine a world where the people working on AI that are also convinced about AI safety decide to shun OpenAI for actions like this. It’s also easy to imagine that OpenAI finds some way to convince their feeble, gullible minds to stay and in fact work twice as hard. My pitch: just tell them GPT X is showing signs of basilisk nature and it’s too late to leave the data mines

Isn’t the primary reason people are so powerfully persuaded by this technology that they’re constantly told that if they don’t use its answers, they will have their life’s work and dignity removed from them? Like how many are in the control group where they persuade people with a gun to their head?

People are “blatantly stealing my work,” AI artist complains

When Jason Allen submitted his bombastically named Théâtre D’opéra Spatial to the US Copyright Office, they weren’t so easily fooled as the judges back in Colorado. It was decided that the image could not be copyrighted in its entirety because, as an AI-generated image, it lacked the essential element of “human authorship”. The office decided that, at best, Allen could copyright specific parts of the piece that he worked on himself in Photoshop.

“The Copyright Office’s refusal to register Theatre D’Opera Spatial has put me in a terrible position, with no recourse against others who are blatantly and repeatedly stealing my work without compensation or credit.” If something about that argument rings strangely familiar, it might be due to the various groups of artists suing the developers of AI image generators for using their work as training data without permission.

and now when somebody generates exactly the same thing, it won’t be new stuff either

el reg: Hipster whines at tech mag for using his pic to imply hipsters look the same, discovers pic was of an entirely different hipster

Appropriately for this sort of meaningless bilge, the name is also bullshit. The way to say “space opera” in French is “space opera”.

“Space opera’s the same, but they call it le space opera.”

It’s been a long time since I lived in France, so my sense of what is idiomatic has no doubt grown rusty, but “Théâtre D’opéra” doesn’t sound right. The word “Théâtre” doesn’t belong in a reference to the place where operas are performed. It’s “L’opéra Garnier” and “L’opéra Bastille” in Paris and “L’opéra Nouvel” in Lyon, for example. I’d read “théâtre d’opéra” as more like “operatic theatre” in the sense of a genre (contrasted with, e.g., spoken-word theatre). I could be completely wrong here, but the title feels like a naive machine translation.

That’s absolutely right.