Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post-Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be).

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Semi-obligatory thanks to @dgerard for starting this)

so mozilla decided to take the piss while begging for $10 donations:

We know $10 USD may not seem like enough to reclaim the internet and take on irresponsible tech companies. But the truth is that as you read this email, hundreds of Mozilla supporters worldwide are making donations. And when each one of us contributes what we can, all those donations add up fast.

With the rise of AI and continued threats to online privacy, the stakes of our movement have never been higher. And supporters like you are the reason why Mozilla is in a strong position to take on these challenges and transform the future of the internet.

the rise of AI you say! wow that sounds awful, it’s so good Mozilla isn’t very recently notorious for pushing that exact thing on their users without their consent alongside other privacy-violating changes. what a responsible tech company!

upside of this: they’ll get told why they’re not getting many of those $10 donations

downside of that (rejection): that could be exactly what one of the ghouls-in-chief there needs to push some or other bullshit

the ability of Mozilla’s executives and PMs to ignore public outcry is incredible, but not exactly unexpected from a thoroughly corrupt non-profit

could revitalise the ivory trade by mining these towers

(/s, about the trade bit)

We know $10 USD may not seem like enough to reclaim the internet with the browser we barely maintain and take on irresponsible tech companies that pay us vast sums of money. But the truth is that as you read this email, hundreds of Mozilla supporters worldwide haven’t realized we’re a charity racket dressed up as a browser who will spend all your money on AI and questionable browser plugins. And when each one of us contributes what we can, we can waste the money all the faster!

With the rise of AI (you’re welcome, by the way, for the MDN AI assistant) and continued threats to online privacy like question like integrating a Mr. Robot ad into firefox without proper code review, the stakes of our movement have never been higher. And ~~supporters~~ marks like you are the reason why Mozilla is in such a strong position to take on these challenges and transform the future of the internet in any way we know how – except by improving our browser, of course, that would be silly. (I’m feeling extra cynical today)

is this what gaslighting is?

Gaslighting? What are you talking about? There’s no such thing as gaslighting. Maybe you’re going crazy

Timnit Gebru on Twitter:

We received feedback from a grant application that included “While your impact metrics & thoughtful approach to addressing systemic issues in AI are impressive, some reviewers noted the inherent risks of navigating this space without alignment with larger corporate players,”

navigating this space without alignment with larger corporate players

stares into middle distance, hollow laugh

No need for xcancel, Gebru is on actual social media: https://dair-community.social/@timnitGebru/113160285088058319

Paul Krugman and Francis Fukuyama and Daniel Dennett and Steve Pinker were in a “human biodiversity discussion group” with Steve Sailer and Ron Unz in 1999, because of course they were

I look forward to the ‘but we often disagreed’ non-apologies. With an absolute lack of self-reflection on how this helped push Sailer/Unz into the positions they are in now. If we even get that.

Pinker: looking through my photo album where I’m with people like Krauss and Epstein, shaking my head the whole time so the people on the bus know I disagree with them

Also John McCarthy and Ray fucking Blanchard

Mr AGP? Wow.

Who could have predicted that liberalism would lead into scientific racism and then everything else that follows (mostly fascism)???

Surely “scientific” is giving them far too much credit? I recall previously sneering at some quotes about skull sizes, including something like women keep bonking their heads?

I believe the term is not so much meant to convey properties of science upon them as to describe the particular strain of racist shitbaggery (which dresses itself in appears-science, much like what happens in/with scientism)

Oh, definitely. For clarity my intention was to riff off them and increase levels of disrespect towards racists. In hindsight, the question format doesn’t quite convey that.

I’m mildly surprised at Krugman, since I never got a particularly racist vibe from him. (This is 100% an invitation to be corrected.) Annoyed that 1) I recognise so many names and 2) so many of the people involved are still influential.

Interested in why Jonathan Marks is there, though. He’s been pretty anti-scientific racism, if memory serves. I think he’s even complained about how white supremacists stole the term human biodiversity. Now I’m curious about the deep history of this group. Marks published his book in 1995 and this is a list from 1999, so was the transformation of the term into a racist euphemism already complete by then? Or is this discussion group closer to the beginning?

Similarly, curious how out some of these people were at the time. E.g. I know that Harpending was seen as a pretty respectable anthropologist up until recently, despite his virulent racism. But I’ve never been able to figure out how much his earlier racism was covert vs. how much 1970s anthropology accepted racism vs. how much this reflects his personal connections with key people in the early field of hunter-gatherer studies.

Oh also, super amused that Pinker and MacDonald are in the group at the same time, since I’m pretty sure Pinker denounced MacDonald for anti-Semitism in quite harsh language (which I haven’t seen mirrored when it comes to anti-black racism). MacDonald’s another weird one. He defended Irving when Irving was trying to silence Lipstadt, but in Evans’s account, while he disagrees with MacDonald, he doesn’t emphasise that MacDonald is a raging anti-Semite and white supremacist. So, once again, interested in how covert vs. overt MacDonald was at the time.

Yeah, Krugman appearing on the roster surprised me too. While I haven’t pored over everything he’s blogged and microblogged, he hasn’t sent up red flags that I recall. E.g., here he is in 2009:

Oh, Kay. Greg Mankiw looks at a graph showing that children of high-income families do better on tests, and suggests that it’s largely about inherited talent: smart people make lots of money, and also have smart kids.

But, you know, there’s lots of evidence that there’s more to it than that. For example: students with low test scores from high-income families are slightly more likely to finish college than students with high test scores from low-income families.

It’s comforting to think that we live in a meritocracy. But we don’t.

There are many negative things you can say about Paul Ryan, chairman of the House Budget Committee and the G.O.P.’s de facto intellectual leader. But you have to admit that he’s a very articulate guy, an expert at sounding as if he knows what he’s talking about.

So it’s comical, in a way, to see [Paul] Ryan trying to explain away some recent remarks in which he attributed persistent poverty to a “culture, in our inner cities in particular, of men not working and just generations of men not even thinking about working.” He was, he says, simply being “inarticulate.” How could anyone suggest that it was a racial dog-whistle? Why, he even cited the work of serious scholars — people like Charles Murray, most famous for arguing that blacks are genetically inferior to whites. Oh, wait.

I suppose it’s possible that he was invited to an e-mail list in the late '90s and never bothered to unsubscribe, or something like that.

I thought that Sailer had coined the term in the early 2000s, but evidently that’s not correct

The Wikipedia article on the Human Biodiversity Institute cites the term human biodiversity as becoming a euphemism for racism sometime in the late 90s, and Marks’ book is from 1995, so there was apparently a pretty quick turnover. Which makes me wonder whether it was a hijacking or an independent invention. The article has a lot of sources, so I might mine them to see if there’s a detailed timeline.

This quote flashbanged me a little

When you describe your symptoms to a doctor, and that doctor needs to form a diagnosis on what disease or ailment that is, that’s a next word prediction task. When choosing appropriate treatment options for said ailment, that’s also a next word prediction task.

From this thread: https://www.reddit.com/r/gamedev/comments/1fkn0aw/chatgpt_is_still_very_far_away_from_making_a/lnx8k9l/

None of these fucking goblins have learned that analogies aren’t equivalences!!! They break down!!! Auuuuuuugggggaaaaaaarghhhh!!!

The problem is that there could be any number of possible next words, and the available results suggest that the appropriate context isn’t covered in the statistical relationships between prior words for anything but the most trivial of tasks i.e. automating the writing and parsing of emails that nobody ever wanted to read in the first place.

This is just standard promptfondler false equivalence: “when people (including me) speak, they just select the next most likely token, just like an LLM”

Instead of improving LLMs, they are working backwards to prove that all other things are actually word prediction tasks. It is so annoying and also quite dumb. No, chemistry isn’t like coding/legos. The law isn’t invalid because it doesn’t have gold fringes and you use magical words.

Behind the Bastards is starting a series about Yarvin today. Always appreciate it when they wander into our bailiwick!

Also means we’re likely to have a better jumping on point to explain these people to those who aren’t already here. Hope he does one on Yud and friends in the not too distant future.

They did come up in the Tech Bros Have Built a Cult Around AI episode.

Their episode on Rudolf Steiner was great when explaining to the grandparents why we had to pull our kids out of a Waldorf kindergarten asap. Funny how so many things fall into the trap of “It can’t be that stupid, you must be explaining it wrong.”

Also, big L for me on due diligence. I thought outdoor classrooms would be good for our fellow ADHD enjoyer; nope.

They did do one on Yud, it’s hard to find and has an annoying amount of side chatter but it’s a pretty solid breakdown of the dude.

The episode is mentioned here: https://shatterzone.substack.com/p/rationalist-harry-potter-and-the

But I can no longer find it on YouTube.

Orange site on pager bombs in Lebanon:

If we try to do what we are best at here at HN, let’s focus the discussion on the technical aspects of it.

It immediately reminded me of Stuxnet, which also from a technical perspective was quite interesting.

technical aspect seems to be for now that israeli secret services intercepted and sabotaged thousands of pagers meant to be distributed to hezbollah operatives, then blew them up all at once. it does look like a small explosive charge, reportedly less than 20g each, but the orange site’s accepted truth is that it was haxxorz blowing up lithium batteries. israelis already did exactly this thing, but with a phone, in a targeted assassination, and the actual volume of such a bomb would be tiny (about 10ml)

what we are best at here at HN

It’s always bootlicking with this crowd jfc

“best at”, they say? I shall have to update my priors

If HN is best at technical discussion that just means they’re even worse at everything else!

They suck at technical explanations too, unless it’s a Wikipedia link.

My joke didn’t land apparently but I did not mean to imply they were particularly good at technical explanations. Adjusted the wording a smidge.

fuckin. when did Mozilla’s twitter feed turn into wall to fucking wall AI spam https://x.com/mozilla

fucking Mozilla really is going all in on this whole “you can’t trust AI, except when we and our business partners do it” openwashing thing completely unaware of how it looks, huh? like, they’ve pushed AI so hard and violated so much community trust in the process that I can’t imagine this is doing anything but costing them their remaining donors.

who is the investor who pushed Mozilla this hard? where the fuck is this coming from?

all their hiring is AI too

haven’t really had the headspace to dig into this but one of my hypotheticals about how this could come to pass is “not enough counter-friction left”. foundations of the guess are: years of ill-advised products, constant killing of worthwhile projects, creep of bayfucker mentality. that shape of thing

I recall seeing people ringing alarm bells about moz ceo pay like 3~4y ago

not that the above guess eliminates the thing you’re pointing to, mind you. I agree that this drive has to be coming from somewhere. my stuff was more coming at it from the “why has this suddenly accelerated so much” angle

I recall seeing people ringing alarm bells about moz ceo pay like 3~4y ago

remember when bringing up Mozilla’s financials would get you yelled at by people who needed to see them as a paragon of open source in spite of all evidence to the contrary?

my personal theory for why it’s accelerating so much is, their board might be doing a Sears[1]. they’re inventing ways to make Mozilla bankrupt because there’s profit in it, and that profit window might be closing rapidly with the antitrust actions against Google coming up. this is all based on vibes though, I’m the polar opposite of an accountant

[1] see also, doing a Red Lobster. no, endless shrimp isn’t why they’re going bankrupt, why in fuck would it be, of course it’s capitalists

For some reason, the news of Red Lobster’s bankruptcy seems like a long time ago. I would have sworn that I read this story about it before the solar eclipse.

Of course, the actual reasons Red Lobster is circling the drain are more complicated than a runaway shrimp promotion. Business Insider’s Emily Stewart explained the long pattern of bad financial decisions that spelled doom for the restaurant—the worst of all being the divestment of Red Lobster’s property holdings in order to rent them back on punitive leases, adding massive overhead. (As Ray Kroc knows, you’re in the real estate business!) But after talking to many Red Lobster employees over the past month—some of whom were laid off without any notice last week—what I can say with confidence is that the Endless Shrimp deal was hell on earth for the servers, cooks, and bussers who’ve been keeping Red Lobster afloat. They told me the deal was a fitting capstone to an iconic if deeply mediocre chain that’s been drifting out to sea for some time. […] “You had groups coming in expecting to feed their whole family with one order of endless shrimp,” Josie said. “I would get screamed at.” She already had her share of Cheddar Bay Biscuit battle stories, but the shrimp was something else: “It tops any customer service experience I’ve had. Some people are just a different type of stupid, and they all wander into Red Lobster.”

Some people are just a different type of stupid, and they all wander into Red Lobster.

I dated someone who worked at Red Lobster, and that absolutely checks out. the number of people who’d come in hoping to grift free shit and take it out on the servers when they didn’t get it (or would try and get someone fired so they could get free shit, depending on the night) was astounding

remember when bringing up Mozilla’s financials would get you yelled at by people who needed to see them as a paragon of open source in spite of all evidence to the contrary?

yup. absolutely nuts shit. I know there’s often a lament about the lack of nuance in the contemporary internet, but god damn if there isn’t also a massive shortage of critical thinking skills and the ability to engage with criticism well

Oh, and I just saw this: https://mastodon.social/@stevetex/113162099798398758

they’re really speedrunning this downslide, huh

What are the chances that–somewhere deep in the bowels of Clearwater, FL–some poor soul has been ordered to develop an AI replicant of L. Ron Hubbard?

There is a substantial corpus.

the only worthwhile use of LLMs: endlessly prompting the L Ron Hubbard chatbot with Battlefield Earth reviews as a form of acausal torture

look at me i am the basilisk now

How would you audit a computer? Would they add USB-C ports to the cans?

cat /dev/thetans > ~/genius.txt

pours a bag of powdered mdma all over the computer “look at it! it’s drenched in e! PURGE!”

Doesn’t have his body thetans, so it would be a different person or something.

They’ve had enough problems with the guy who claimed to be the reincarnation of LRH.

I reckon Miscavige wouldn’t want a robo-LRH as it could challenge his power within the organization.

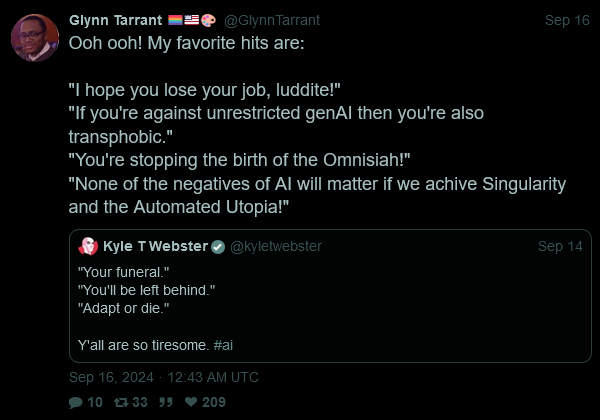

Pulling out a pretty solid Tweet @ai_shame showed me:

To pull out a point I’ve been hammering since Baldur Bjarnason talked about AI’s public image, I fully anticipate tech’s reputation cratering once the AI bubble bursts. Precisely how the public will view the tech industry at large in the aftermath I don’t know, but I’d put good money on them being broadly hostile to it.

If you’re against unrestricted genAI then you’re also transphobic

What. Wait, has anyone claimed this? Because that’s absurd.

Dunno but why not, after NaNoWriMo claimed that opposing “AI” means you’re classist and ableist. Why not also make objecting sexist, racist, etc. I’m going to be ahead of the curve by predicting that being against ChatGPT will also be a red flag that you’re a narcissistic sociopath manipulator because uhh because abused women need ChatGPT to communicate with their toxic exes /s

Considering how much the AI hype feels like the cryptocurrency hype, during which every joke you made had already been seriously used to make a coin and been pumped and dumped already, I wouldn’t be surprised at all.

Oh, I wonder if they are referring to this shit, where someone came to r/lgbt fishing for compliments for the picture they’d asked Clippy for, and was completely clowned on by the entire community, which then led to another subreddit full of promptfans claiming that artists are transphobic because they didn’t like a generated image which had a trans flag in it.

It warms the cockles of my heart that all across the web people find AI as annoying as I do.

remembering the NFT grifter who loudly asserted that if you weren’t into NFTs then you must be a transphobe

(it was Fucking Thorne)

fondly remembering replying to these types of people with screenshots from the wikipedia page on affinity fraud. they really hated that

I suspect it’ll land somewhere above “halitosis” but below “wearing black socks with crocs”

Follow up for this post from the other day.

Our DSO has now greenlit the stupid Copilot integration because “Microsoft said it’s okay” (of course they did), and he was also at some stupid AI convention yesterday, and whatever fucking happened there, he’s become a complete AI bro and is now preaching the Gospel of Altman that everyone who’s not using AI will be obsolete in a few years and we need to ADAPT OR DIE. It’s the exact same shit CEO is spewing.

He wants an AI that handles data security breaches by itself. He also now writes emails with ChatGPT even though just a week ago he was hating on people who did that. I sat with my fucking mouth open in that meeting and people asked me whether I’m okay (I’m not).

I need to get another job ASAP or I will go clinically insane.

He wants an AI that handles data security breaches by itself. He also now writes emails with ChatGPT

He is the data security breach.

E: Dropped a T. But hey, at least chatgpt uses SSL to communicate, so the databreach is now constrained to the ChatGPT training data. So it isn’t that bad.

I’m so sorry. the tech industry is shockingly good at finding people who are susceptible to conversion like your CEO and DSO and subjecting them to intense propaganda that unfortunately tends to work. for someone lower in the company like your DSO, that’s a conference where they’ll be subjected to induction techniques cribbed from cults and MLM schemes. I don’t know what they do to the executives — I imagine it involves a variety of expensive favors, high levels of intoxication, and a variant of the same techniques yud used — but it works instantly and produces someone who can’t be convinced they’ve been fed a lie until it ends up indisputably losing them a ton of money

Yeah, I assume that’s exactly what happened when CEO went to Silicon Valley to talk to “important people”. Despite being on a course to save money before, he dumped tens of thousands into AI infrastructure which hasn’t delivered anything so far and is suddenly very happy with sending people to AI workshops and conferences.

But I’m only half-surprised. He’s somewhat known for making weird decisions after talking to people who want to sell him something. This time it’s gonna be totally different, of course.

It’s the exact same shit CEO is spewing.

I have realized working at a corporation that a lot of employees will just mindlessly regurgitate the company message. And not in a “I guess this is what we have to work on” way, but as if it replaced whatever worldview they had previously.

Not quite sure what to make of this TBH.

A lemmy-specific coiner today: https://awful.systems/post/2417754

The dilema of charging the users and a solution by integrating blockchain to fediverse

First, there will be a blockchain. There will be these cryptocurrencies:

This guy is speaking like he is in Genesis 1

I guess it would be better that only the instances can own instance-specific coins.

You guess alright? You mean that you have no idea what you’re saying.

if a user on lemmy.ee want to post on lemmy.world, then lemmy.ee have to pay 10 lemmy.world coin to lemmy.world

What will this solve? If 2 people respond to each other’s comments, the instance with the most valuable coin will win. What does that have to do with who caused the interaction?

Yes crypto instances, please all implement this and “disallow” everyone else from interacting with you! I promise we’ll be sad and not secretly happy and that you’ll make lots of money from people wanting to interact with you.

I know I won’t be secretly happy if they do this.

that’s lemm.ee last time i’ve checked. he made that mistake 14x

1 post 6 comments joined 3 months ago, “i’m naive to crypto” “I want to host an instance that serves as a competitive alternative to Facebook/Threads/X to the users in my country,”

yeah he doesn’t even have to charge for interacting with him i’ll avoid him without it

EVE Online creator CCP announces a new game with BLOCKCHAIN and it’s not going well:

Apparently they got investment from A16Z:

I admit, in my haste, I read that link as Marc Andreessen openly announcing they’re investing in the Chinese Communist Party, which is slightly funnier than the reality of yet another crypto game.

it’s even funnier than that (albeit also super depressing, in some ways)

primer: hilmar (the head honcho at ccp) has been “crypto = bae” for going on 5~6y now (that I’m aware of, maybe longer), to the point that there are pictures of the guy at chain confs from around then, and mentions of people talking with him in The Private Backrooms at said chain confs. it’s been his darling and he has wanted very, very hard to put it into tq (the main game server). see this for example (and fwiw, warning: eve reddit)

in-fill: there also appears to be quite a bit of a cart-before-the-horse element in how the company operates - they will frequently first work on something, then when it starts getting near release they’ll send out some surveys that almost without fail have some extremely loaded questions in them. an example would be that instead of asking players what they generally think of xyz feature/intended mechanic/etc, the survey will instead garden path answers along, attempting to manufacture consent/compliance.

and, last little detail: keep in mind this is a game where people will min-max the everloving shit out of something, and where a fair number of people out there are willing to put actual time into making in-game money with which to fund their gametime (“plexing”). people who would be willing to engage with some really ridiculous abstract/effortful shit for whatever gains they could, just because they could.

so with that said, during 2021/2022 (in the middle of the NFT tsunami of shit) the first big round of “we want to add NFTs to tq” came about. and there were a fair amount of indications that ccp had already sunk quite a bunch of devtime on it, and were getting ready to roll it out. the pitch was, uh, “not well received” would be putting it extremely lightly. it was panned so fucking extremely, they had to put out this newsblog which included the remarkably tortured phrase “Not For Tranquility”

which is the early strand of what leads us to this particular little “gem”. it’s hard to get specific details because they’re fairly tight-lipped about internal processes and shit, so the following is definitely heavily conjecture. hilmar didn’t want to break up with his bae, and kept pushing trying to keep this alive, somehow. whether the drive for this is also tied up with the Pearl Abyss acquisition some years prior is unclear (but Black Desert Online players all cried wolf when PA bought CCP, and said to expect increasing financial fuckery). what does appear to be the case is that a number of developers (possibly the pro-NFT among them) got sequestered off to the Special Project that became this thing, along with the a16z money a while back. the general feeling in the eve:o community is still largely “get fucked”, and this project is likely to be double-stillborn (on account of dead kriptoes and an unwanted game/product)

I look forward in earnest to see just how dead it is on arrival

[0] - it took less than 2mo from the “would you like to play a fps in the eve universe? what would you want in it? what do you normally do in eve? what would you do in an eve-universe fps? why would you want your eve …” survey going out to the announcement “hey surprise! we have an fps!”[1]

[1] - again. they’ve failed a few times, with multiples out. ccp product leadership real bad.

Man this company has had some really interesting ideas and then the execution always falters.

I was still subscribed when they attempted the first eve-fps crossover. it seemed great, and then for whatever reason it was a console exclusive with a subscription fee on top. They didn’t get the numbers they were planning for and the whole thing just died on the vine.

They’ve had some neat tech here and there and the whole experience is great for building out your psychopathy but i lost interest after the Greed Is Good phase of CCCP games started.

was that the one that targeted the near-eol console?

In 3 years they will release their NFT game.

Just discovered Patrick Boyle’s channel. Deadpan sneer perfection https://www.youtube.com/watch?v=3jhTnk3TCtc

edit: tried to post invidious link but didn’t seem to work

his delivery regarding the content is absolute perfection

The only invidious instance that works this week is nadeko:

Some killjoys pour cold water over everyone’s favorite dental biohack, and HN is not happy

Sometimes you read an article and you think “this article doesn’t want me to do X, but all its arguments against X are utterly terrible. If that’s the best they could find, X is probably alright.”

that thread is an unholy combination of two of my least favorite types of guys: techbros willfully misunderstanding research they disagree with, and homeopaths

this article doesn’t want me to drink a shitload of colloidal silver, but all its arguments against drinking colloidal silver (it doesn’t do anything for your health, it might turn you blue, it tastes like ass) are utterly terrible. If that’s the best they could find, drinking a shitload of colloidal silver is probably alright.

What a terrible argument. Anything that involves messing around with your teeth needs to have good reasons to do it, rather than just good arguments against doing it.

I’d think ‘we don’t know the side effects, it prob doesn’t work, and they are trying to sidestep the FDA’ would be good arguments against it. Especially since in the US, thalidomide (yes, very much a dead horse) (mostly) wasn’t a problem because the FDA stopped it.

Anyway, it seems like the full-scale FDA project stalled due to a lack of volunteers, so I suggest the HN people mad about this help out. Hey, it might turn out to actually work.

Absolutely unhinged. Are these people from the As-Seen-On-TV dimension, where it’s common for folks to burn their house down every time they try to fry an egg?

From the comments: “Putting my conspiracy theory hat on, the dental hygiene industry in the US is for-profit, like the pharmaceutical, and would rather sell you a treatment than a cure.”

Have these people ever BEEN to the dentist? While I know that certain dental procedures (tooth straightening in kids, whitening, etc) are way overused in the US no dentist worth their salt will allow a check-up to go by without a stern lecture on preventing future trouble. And if they don’t do that then the hygienist most certainly will…

Here in Sweden the hygienist is definitely the Bad Cop in this scenario. I got sternly talked to by someone fresh out of school, so I don’t doubt there’s a retired Master Sergeant on the staff of the college they go to…

We could also just fluoridate the water supply, which also massively reduces cavities.

Despite Soatok explicitly warning users that posting his latest rant[1] to the more popular tech aggregators would lead to loss of karma and/or public ridicule, someone did just that on lobsters and provoked this mask-slippage[2]. (The comment is in three paras, which I will subcomment on below.)

Obligatory note that, speaking as a rationalist-tribe member, to a first approximation nobody in the community is actually interested in the Basilisk and hasn’t been for at least a decade. As far as I can tell, it’s a meme that is exclusively kept alive by our detractors.

This is the Rationalist version of the village worthy complaining that everyone keeps bringing up that one time he fucked a goat.

Also, “this sure looks like a religion to me” can be - and is - argued about any human social activity. I’m quite happy to see rationality in the company of, say, feminism and climate change.

Sure, “religion” is on a sliding scale, but Big Yud-flavored Rationality ticks more of the boxes on the “Religion or not” checklist than feminism or climate change. In fact, treating the latter as a religion is often a way to denigrate them, and never used in good faith.

Finally, of course, it is very much not just rationalists who believe that AI represents an existential risk. We just got there twenty years early.

Citation very much needed, bub.

[1] https://soatok.blog/2024/09/18/the-continued-trajectory-of-idiocy-in-the-tech-industry/

[2] link and username withheld to protect the guilty. Suffice to say that They Are On My List.

nobody in the community is actually interested in the Basilisk

But you should be: y’all created an idea which some people do take seriously and it is causing them mental harm. In fact, Yud took it seriously in a way that shows that he either believes in potential acausal blackmail himself, or that enough people in the community believe it that the idea would cause harm.

A community he created to help people think better, which now has a mental minefield somewhere, but because they want to look sane to outsiders, people don’t talk about it now. (And they also pretend that the people who mentally exploded don’t exist.) This is bad.

I get that we put them in a no-win situation. They could take their own ideas seriously enough to talk about acausal blackmail, and then either help people by disproving the idea, or help people by going ‘this part of our totally Rational way of thinking is actually toxic and radioactive and you should keep away from it (a bit like Hegel, am I right(*))’. That makes them look a bit silly for taking it seriously (of which you could say: who cares?), or a bit openly culty if they go with the secret-knowledge route. Or they could pretend it never happened, never was a big deal, and isn’t a big deal now, in an attempt to not look silly. Of course, we know what happened, and that it is still causing harm to a small group of (proto-)Rationalists. This option makes them look insecure, potentially dangerous, and weak to social pressure.

That they do the last one, while having also written a lot about acausal trading, just shows they don’t take their own ideas that seriously. Or if it is an open secret not to talk openly about acausal trade due to acausal blackmail, that is just more cult signs. You have to reach level 10 before they teach you about Lord Xenu type stuff.

Anyway, I assume this is a bit of a problem for all communal worldbuilding projects: eventually somebody introduces a few ideas which have far-reaching consequences for the roleplay but which people would rather not have included. It gets worse when the non-larping outside then notices you and the first reaction is to pretend larping isn’t that important for your group because the incident was a bit embarrassing. Own the lightning bolt tennis ball, it is fine. (**)

*: I actually don’t know enough about philosophy to know if this joke is correct, so apologies if Hegel is not hated.

**: I admit, this joke was all a bit forced.

Obligatory note that, speaking as a rationalist-tribe member, to a first approximation nobody in the community is actually interested in the Basilisk and hasn’t been for at least a decade.

Sure, but that doesn’t change that the head EA guy wrote an op-ed for Time magazine saying that a nuclear holocaust is preferable to a world that has GPT-5 in it.

Oh, that craziness is orthodoxy (check the last part of the quote).

Finally, of course, it is very much not just rationalists who believe that AI represents an existential risk. We just got there twenty years early.

This one?

nobody in the community is actually interested in the Basilisk

except the ones still getting upset over it, but if we deny their existence as hard as possible they won’t be there

The reference to the Basilisk was literally one sentence and not central to the post at all, but this big-R Rationalist couldn’t resist singling it out and loudly proclaiming it’s not relevant anymore. The m’lady doth protest too much.